A guy I went to school with at UNC, Todd Stohs, has created his own college football ranking system, available here. His computer puts Marshall in the top 10 and Kentucky in the top 25, both of which are optimistic.

Category Archives: Research

Forecasting Attendance

I’m starting a project in which I attempt to build a model to forecast the attendance at a sporting event. Historical data will go in and future forecasts will pop out. I’m interested in predicting total attendance at each game.

Here are some variables that I think might affect the attendance at a given game:

-Sport

-Home Team

-Away Team

-Stadium/Location

-Month

-Weekday

-Time of game

-Home Record

-Home Playoff Chance

-Away Record

-Away Playoff Chance

-Number of home game (home openers obviously well attended)

-Weather

-Temperature

-Indoor/outdoor stadium

-Attendance Capacity

-Promotions

-TV Coverage of game

-Is it a holiday or holiday weekend?

-In-division game? Rivalry game?

-Is away team defending divisional/conference/league champion?

-Do teams rarely play? Intra-league game?

-Current injuries to key starters

I’m trying to be general, so that similar variables work across sports. Some of them (indoor/outdoor, for example) obviously don’t make sense in every sport. These variables will probably feed into some form of regression model, so the variables “Home Team” and “Stadium/location” will incorporate a lot of fixed effects of the team: size of fan base, interest in that sport in that city, average cost of tickets, etc.
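One way to picture how a categorical variable like “Home Team” turns into fixed effects in a regression is dummy (one-hot) coding. The snippet below is only a sketch under assumptions: the `one_hot` helper, the `home_team` column name, and the team labels are all made up for illustration, and no particular regression package is implied.

```python
def one_hot(rows, column):
    """Dummy-code one categorical column.

    The first level (alphabetically) is dropped and acts as the baseline,
    so each remaining column is an offset relative to that baseline.
    """
    levels = sorted({row[column] for row in rows})
    kept = levels[1:]  # drop the baseline level
    names = [f"{column}={level}" for level in kept]
    matrix = [[1 if row[column] == level else 0 for level in kept]
              for row in rows]
    return names, matrix


# Hypothetical games; the team labels are placeholders, not real data.
games = [
    {"home_team": "Team A"},
    {"home_team": "Team B"},
    {"home_team": "Team C"},
    {"home_team": "Team B"},
]
names, matrix = one_hot(games, "home_team")
print(names)
print(matrix)
```

In a fitted regression, the coefficient on each dummy column would soak up whatever is constant about that team (fan base, ticket prices, and so on), which is the sense in which these variables carry fixed effects.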

So, am I missing any variables that would help predict the attendance at a game? Help me out.

How to bulk download Batter vs. Pitcher Data

I worked on a project in a Complex Systems class where I wanted to know if there was any value in looking at the network of at-bats in baseball. To create this network, I assumed that if a batter got on base via hit, HBP, or walk, the batter won the at-bat and I drew a link from the pitcher to the batter. If the pitcher got the batter out, I said the pitcher won the at-bat and drew a link from the batter to the pitcher in the network. This created a directed graph that I could run network statistics on, such as PageRank. I wanted to know how well the rank of a player in summary statistics (ERA, AVG, OBP, WAR, etc.) matched up with the rank of the player in PageRank. PageRank, in this context, puts value upon beating other players with high PageRank. So, when playing the Dodgers, getting a hit off of Clayton Kershaw last year was worth more than getting a hit off of Chris Capuano. Did good players overperform or underperform against other good players? Does this have value for predicting playoff success? These were some of my questions as I started my study.
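To make the construction concrete, here is a minimal sketch of the loser-to-winner graph and a plain power-iteration PageRank. It is not the code from the class project: the function names, the damping factor of 0.85, the iteration count, and the placeholder player labels are all assumptions for illustration.

```python
from collections import defaultdict


def build_edges(at_bats):
    """Turn (batter, pitcher, batter_reached_base) tuples into directed edges.

    Each edge points from the loser of the at-bat to the winner.
    """
    edges = []
    for batter, pitcher, reached_base in at_bats:
        if reached_base:
            edges.append((pitcher, batter))  # batter won
        else:
            edges.append((batter, pitcher))  # pitcher won
    return edges


def pagerank(edges, damping=0.85, iterations=100):
    """Power-iteration PageRank; repeated edges count as extra weight."""
    nodes = sorted({node for edge in edges for node in edge})
    out_links = defaultdict(list)
    for src, dst in edges:
        out_links[src].append(dst)

    n = len(nodes)
    rank = {node: 1.0 / n for node in nodes}
    for _ in range(iterations):
        new_rank = {node: (1.0 - damping) / n for node in nodes}
        for node in nodes:
            targets = out_links[node]
            if targets:
                share = damping * rank[node] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A player who never lost has no outgoing links;
                # spread that rank evenly over everyone.
                share = damping * rank[node] / n
                for target in nodes:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Placeholder at-bats, not real 2013 results.
at_bats = [
    ("batter_a", "pitcher_x", True),
    ("batter_b", "pitcher_x", False),
    ("batter_a", "pitcher_x", True),
]
ranks = pagerank(build_edges(at_bats))
for player, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(player, round(score, 3))
```

In this toy example, the batter who reached base against a pitcher who had himself beaten someone ends up with the highest score, which is the "beating good players is worth more" effect described above.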

To get results of batter/pitcher matchups, I crawled Baseball-Reference.com. Their Play Index Tool lets you look up the results of any players’ batting/pitching matchups, possibly filtered by year. I wanted to download every at-bat for the year 2013.

To begin with, I’m not sure Baseball-Reference.com wanted me to crawl their records. They have disclaimers against this sort of bulk downloading, but I was using the data for a personal project and didn’t profit from it, so I went ahead. They didn’t kick off my IP as I went about crawling/downloading these matchups.

My code for this project is in Python. I used the screen-scraping package Beautiful Soup.

I first had to grab the usernames for all players in the majors in 2013. I went to this page to get the batters and this page to get the pitchers. Looking at the page source for the pitching page, you notice that the usernames start around line 1727. Download the page source for those pages and use some logic to grab all the usernames for pitchers and batters. Here is my ugly code to parse the usernames.

Once you have the usernames, you’ll want to crawl Baseball-Reference.com to get matchup data from every batter. Unfortunately, a batter’s matchup data (like this for Barry Larkin) creates the same page source whether you filter by year or not. Filtering by year only dynamically changes what is shown on the screen; it doesn’t change the page source, which is what we are going to crawl. So we have to use three steps to get only 2013 data:

-Parse the page source for a batter’s all-time matchups to see which pitchers he ever faced

-For each pitcher, see if that pitcher is in the list of 2013 pitchers

-If he is, crawl 'http://www.baseball-reference.com/play-index/batter_vs_pitcher.cgi?batter='+batter+'&pitcher='+pitcher to get the line related to 2013. Add this line to your statistics that you are keeping.
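The three steps above can be sketched without touching the network. This is an illustration only: the function names and the placeholder usernames are assumptions, and the step that actually parses a batter's all-time matchup page is left out because the page layout isn't described here.

```python
BASE_URL = "http://www.baseball-reference.com/play-index/batter_vs_pitcher.cgi"


def matchup_url(batter, pitcher):
    """Build the batter-vs-pitcher URL from two usernames."""
    return BASE_URL + "?batter=" + batter + "&pitcher=" + pitcher


def matchups_to_crawl(batter, pitchers_faced_all_time, pitchers_2013):
    """Keep only the pitchers who also appear in the 2013 pitcher list."""
    wanted = set(pitchers_2013)
    return [matchup_url(batter, pitcher)
            for pitcher in pitchers_faced_all_time
            if pitcher in wanted]


# Placeholder usernames, not real Baseball-Reference IDs.
urls = matchups_to_crawl(
    "batter01",
    pitchers_faced_all_time=["pitchA01", "pitchB01", "pitchC01"],
    pitchers_2013=["pitchB01", "pitchC01", "pitchD01"],
)
for url in urls:
    print(url)
```

Using a set for the 2013 pitchers keeps the membership check cheap when the same list is reused for every batter.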

Here is my Python code to download all at-bats from 2013. You’ll notice that I import urlopen from urllib2 to tell Python to open the webpages of interest. Then I use Beautiful Soup to parse the page source. Throughout the code, I added in lines like “time.sleep(random.random()*10)” from the time package to make the code delay a random amount of time. This kept me from overloading Baseball-Reference.com with requests and hopefully kept me from pissing them off. If you’re interested in using the code, note that you’ll obviously need to change your input/output folders to match your computer.
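For anyone on Python 3, where `urlopen` lives in `urllib.request` rather than `urllib2`, the delay pattern might look roughly like the sketch below. It is not the original script: `polite_fetch` and its parameters are hypothetical names, and the Beautiful Soup parsing step is omitted so the example needs nothing beyond the standard library.

```python
import random
import time
from urllib.request import urlopen  # Python 3 location of urlopen


def polite_fetch(urls, fetch=None, sleep=time.sleep, max_delay=10.0):
    """Fetch each URL in turn, pausing a random 0..max_delay seconds after each.

    `fetch` and `sleep` are injectable so the loop can be tried out
    without hitting a real site or actually waiting.
    """
    if fetch is None:
        fetch = lambda url: urlopen(url).read()
    pages = {}
    for url in urls:
        pages[url] = fetch(url)
        # Same idea as time.sleep(random.random()*10) in the post.
        sleep(random.random() * max_delay)
    return pages


# Dry run with a fake fetcher and a recorder in place of real sleeping.
delays = []
pages = polite_fetch(
    ["url-one", "url-two"],
    fetch=lambda url: "<html>" + url + "</html>",
    sleep=delays.append,
)
print(len(pages), "pages fetched;", len(delays), "pauses recorded")
```

Each value in `pages` is the raw page source, which is what you would then hand to Beautiful Soup for parsing.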

Hope this helps. I know it’s not 100% complete in its description, but post in the comments if you’re confused in some way.

Part 2 of Sacramento Kings Crowdsourcing their Draft Analytics

Part one of Grantland’s video showing the Kings crowdsourcing their draft analytics.

Here’s part two, in which the Kings fail to do anything other than draft Nik Stauskas. Success?

https://www.youtube.com/watch?v=rEN6ad_Aw-M

Betting on Underdogs in the NFL

I recently read the paper “Herd behaviour and underdogs in the NFL” by Sean Wever and David Aadland. It describes the phenomenon whereby bettors overvalue favorites in the NFL. They speculate briefly that this is due to the media and analysts over-hyping certain popular teams and under-estimating the parity that exists in the NFL. They create a model that suggests that betting on certain longshot underdogs will result in a positive expected betting profit. Because you typically wager $110 to win $100 when betting against the spread, you have to win more than 52.38% of the time to make money.

They fit their model on data from 1985-1999, and the model recommended betting on home underdogs when the spread was +6.5 or more and away underdogs when the spread was -10.5 or less. If they had used this betting strategy from 2000-2010, they would have made 427 bets, of which 246 would have won. That’s a winning percentage of 57.61%. If they bet $110 on each of those 427 games, they would have made $4690 over the 10 years, thus returning about $11 profit on each $110 bet. That’s a 10% return on investment, if I’m not mistaken. Pretty impressive.
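The arithmetic is easy to check. The short sketch below recomputes the break-even rate and the 2000-2010 results from the numbers quoted above; the function names are mine, and it assumes every bet risks $110 to win $100 with no pushes.

```python
def breakeven_win_rate(risk=110.0, win=100.0):
    """Win rate at which expected profit is zero: p*win = (1-p)*risk."""
    return risk / (risk + win)


def betting_summary(bets, wins, risk=110.0, win=100.0):
    """Profit and return for a fixed-stake record, assuming no pushes."""
    losses = bets - wins
    profit = wins * win - losses * risk
    return {
        "win_rate": wins / bets,
        "profit": profit,
        "profit_per_bet": profit / bets,
        "roi": profit / (bets * risk),
    }


print(round(breakeven_win_rate() * 100, 2))  # break-even percentage
summary = betting_summary(bets=427, wins=246)
print(round(summary["win_rate"] * 100, 2))   # winning percentage
print(summary["profit"])                      # total profit in dollars
print(round(summary["profit_per_bet"], 2))    # profit per $110 bet
print(round(summary["roi"] * 100, 2))         # return on investment, percent
```

With 246 wins and 181 losses, that is 246 × $100 − 181 × $110 = $4,690, or just under $11 per bet, which is where the roughly 10% figure comes from.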

Scout Scheduling

Interesting cover story in the most recent Analytics magazine from INFORMS. It tells the story of how The Perduco Group, a small defense consulting firm out of Dayton, Ohio, started offering services in the field of sports analytics. Yes, right now it appears the firm only has one lead analyst in the sports realm, but they still have a variety of interesting services/offerings.

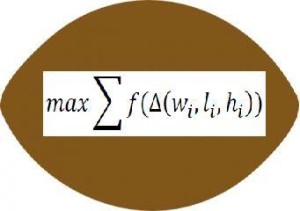

The most interesting offering in the sports realm was in the scheduling of the travel of scouts. It makes sense that you would want to maximize the time your scouts spend viewing high value recruits while minimizing travel costs. Once schedules and players of interest are inputted, it seems like a great area for automation/optimization. Great idea.

A small company that focuses on defense and sports. Seems right up my alley.

(Image from The Perduco Group’s website http://www.theperducogroup.com/#!scout-scheduling/c1gq4.)

Directional Statistics

At Booz Allen in 2012, I needed to find the average time of day that something happened as part of my code. Taking the normal (linear) average of times of day doesn’t work because there is always a discontinuity somewhere, usually midnight. Since times of day are periodic, 11:59 pm and 12:01 am should average to midnight, not noon. But they’ll average to roughly noon if you take the linear average of something like 2359 (military time) and 0001, which comes out to 1180.

I thought we had stumbled onto an area of mathematics that needed further study: finding the average and variance of periodic variables. Because all of my internet searches were things like “how to average periodic variables” and “average time of day” and the like, I could not find anything on the internet about how to solve this problem. So I started to derive an answer and ended up with something very similar to what I now learn is called Directional Statistics (see the Wikipedia article on directional statistics). I never thought to search for anything like “Directional Statistics” or “Circular Statistics” or anything similar, so I didn’t find Directional Statistics until Dave Anderson showed them to me this week.

The basic idea (that AJ Mobley, Dave, and I derived at Booz Allen) is that you treat times of day as points on the unit circle, and then average the points in two dimensions. Convert the angle of the “average point” back into a time to get the average time of day. Additionally, the farther the average point is from the origin, the lower the variance of the points.
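Here is a minimal sketch of that idea in Python, using only the standard library. It is not the code we wrote at Booz Allen; the function name and the choice to measure time in minutes after midnight are assumptions for illustration.

```python
import math

MINUTES_PER_DAY = 24 * 60


def circular_mean_time(minutes_list):
    """Average times of day given as minutes after midnight.

    Returns (mean_minutes, resultant_length). The resultant length is the
    distance of the average point from the origin: near 1 means the times
    are tightly clustered, near 0 means they are spread around the clock.
    """
    angles = [2 * math.pi * m / MINUTES_PER_DAY for m in minutes_list]
    x = sum(math.cos(a) for a in angles) / len(angles)
    y = sum(math.sin(a) for a in angles) / len(angles)
    mean_angle = math.atan2(y, x) % (2 * math.pi)
    mean_minutes = (mean_angle * MINUTES_PER_DAY / (2 * math.pi)) % MINUTES_PER_DAY
    return mean_minutes, math.hypot(x, y)


# 11:59 pm and 12:01 am, in minutes after midnight.
mean_minutes, length = circular_mean_time([23 * 60 + 59, 1])
# Distance from midnight measured around the clock, to absorb rounding.
off_by = min(mean_minutes, MINUTES_PER_DAY - mean_minutes)
print("minutes away from midnight:", round(off_by, 6))
print("resultant length:", round(length, 6))
```

One caveat: if the times balance out exactly (say, noon and midnight), the average point sits at the origin and the mean time of day is not well defined, so the resultant length is worth checking before trusting the mean.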

Reds Caravan Reveals Team Employs 3 Programmers

The Reds Caravan rolled through Bloomington yesterday. This edition of the traveling side-show featured Marty Brennaman, Eric Davis, Assistant GM Bob Miller, new guy Brayan Pena, minor leaguer Tucker Barnhart, and Big Red Machine glue-guy Doug Flynn. After Marty introduced everyone, he opened up the floor to questions. I asked Bob Miller about the status of the Reds’ Analytics efforts. He tried to convey the vast amount of data that the team collects, including over 90 data points for every pitch thrown. The Director of Baseball Research/Analysis is Sam Grossman, who heads a team of three programmers. The team also employs over 20 scouts, who are especially necessary for understanding high school and foreign talent where the data on the player’s performance is sparser or nonexistent.

While I appreciate the honest and helpful answer from Mr. Miller, I wonder whether having three people doing analytics for a team that is going to spend $100M+ each year on player payroll is enough. Do other teams have more analytics professionals? The Reds, under the Dusty Baker regime, tended to ignore a lot of largely accepted analytics wisdom:

-Baker consistently batted his shortstop in the top 2 spots in the order, despite the Reds not having an above-average bat playing shortstop

-The Reds left Aroldis Chapman, one of the most dominant pitchers in baseball, to languish in the closer role for 2 years, where he pitched a total of 135 innings, having a minimal effect on the game. Mike Leake, the Reds’ 5th starter, registered 371 innings in those 2 years.

-Baker wanted his hitters to be aggressive at the plate, which lowered their walk rate, sometimes to comical levels. Getting on base is important, and walks are a way to get on base.

I’d like to see the Reds become more cutting-edge in accepting data-driven wisdom that will improve their team’s performance. As a skilled analytics developer, it’s frustrating for me to see my team frequently mocked by those individuals who work full-time in baseball analytics. Maybe they’ll hire me as a consultant. I can fix them.

Maria wrote a wrap-up of the Bloomington Caravan stop for Redleg Nation. You should check it out here!

Task Switching

As a PhD student taking classes, I have a lot of tasks to switch between and focus on throughout the day. Homework, projects, papers to read, textbooks to read, the internet. I feel like I lose a lot of time, energy, and willpower over-thinking what I should work on next. I wonder if I should just pre-prioritize my tasks at the beginning of each day or week, and then only go to the next task once the first is finished (or I am stuck without progress). It seems like it would be a big investment to start a prioritization effort like that (setup costs and commitment costs). Maybe next week…

The blog Study Hacks has multiple discussions about productivity.